Abstract

Multiplane images (MPIs) have shown great promise as a representation for efficient novel view synthesis. In this work, we present a new MPI-based approach for real-time novel view synthesis of monocular videos. We first formulate a new representation, referred to as layered MPI (LMPI), that reduces the number of parameters in an MPI and makes it suitable for videos. We then propose a pipeline that generates a sequence of temporally consistent LMPIs from a single monocular video. The pipeline exploits information from multiple frames, does not require any camera pose information, and produces compelling layered multiplane video results. Experiments show that our framework achieves better visual quality than several baselines and supports interactive novel view synthesis during video playback.
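As background on why MPIs render efficiently, here is a minimal sketch of the standard back-to-front "over" compositing used for MPI rendering. It works on a single pixel's ray and assumes every plane has already been warped into the target view (the per-plane homography warp is omitted). The function name `composite_ray` is hypothetical. This is the generic MPI operation, not the LMPI rendering procedure of this work.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]


def composite_ray(planes: List[Tuple[RGB, float]]) -> RGB:
    """Composite (color, alpha) samples for one pixel, ordered back-to-front
    (farthest plane first), with the standard "over" operator.

    Hypothetical helper for illustration; assumes planes are already warped
    into the target view.
    """
    out = (0.0, 0.0, 0.0)
    for color, alpha in planes:
        # Each nearer plane covers a fraction `alpha` of everything behind it.
        out = tuple(alpha * c + (1.0 - alpha) * o for c, o in zip(color, out))
    return out


# An opaque blue back plane seen through a half-transparent red front plane.
print(composite_ray([((0.0, 0.0, 1.0), 1.0), ((1.0, 0.0, 0.0), 0.5)]))
```

Because rendering a new view reduces to one warp per plane followed by this cheap per-pixel loop, MPIs can be rendered in real time; the cost grows with the number of planes, which is the parameter count the LMPI aims to reduce.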

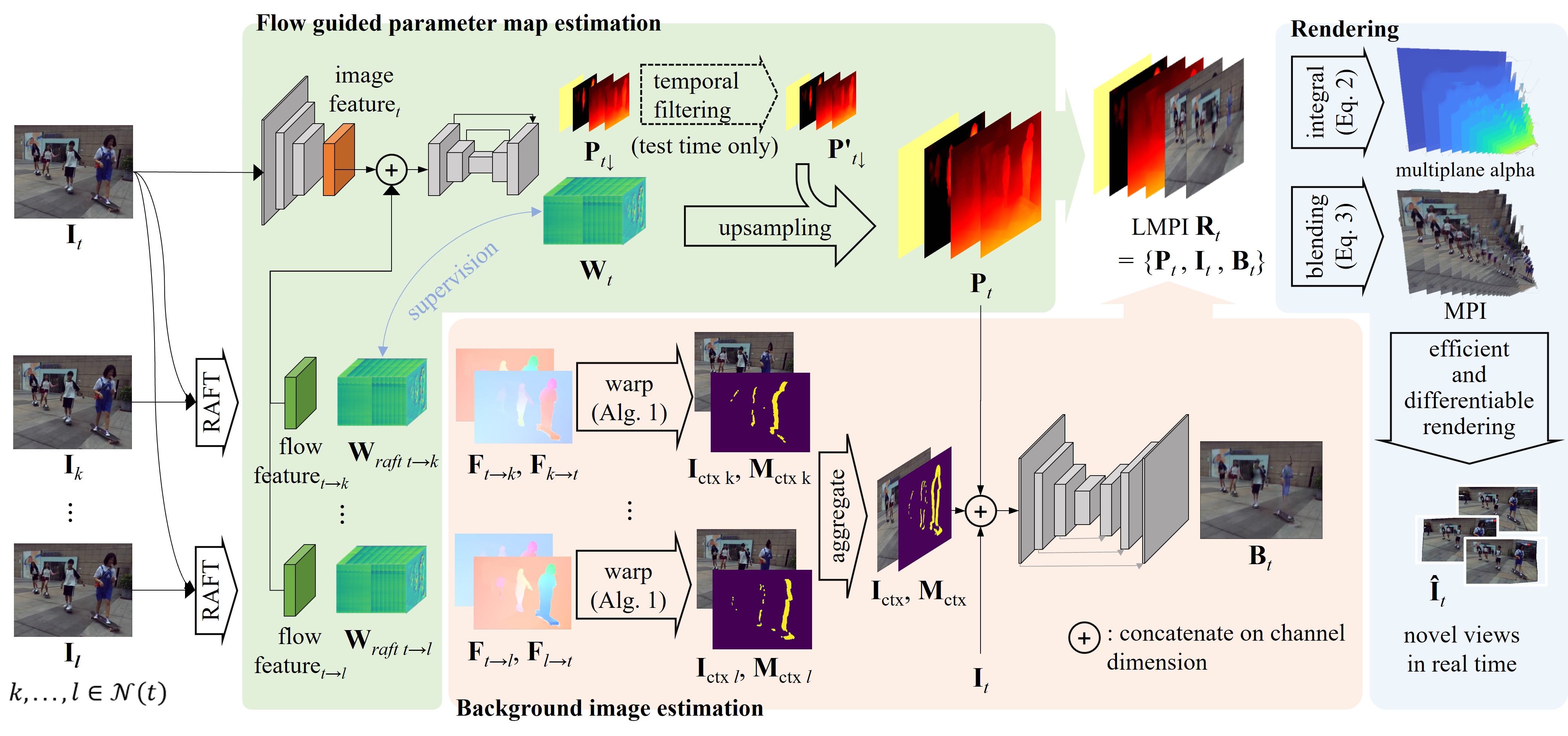

Pipeline

For each frame, we operate on a local time window and generate an LMPI for rendering. An LMPI consists of a parameter map P_t that describes the scene geometry, and a foreground image I_t and a background image B_t that store the scene appearance. The pipeline consists of two modules, which generate the parameter map and the background image, respectively. The parameter map estimation module (green) first predicts a coarse parameter map and upsampling weights; the coarse parameter map is then upsampled to obtain the final fine parameter map. The background estimation module (red) first aggregates a context image and a context mask from neighboring frames, then uses a U-Net to generate the final background image.
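The text does not say how the predicted upsampling weights are applied. One common scheme, assumed here purely for illustration, is learned convex upsampling in the style of RAFT: each fine pixel is a softmax-weighted combination of the 3x3 coarse neighborhood around its parent pixel. The function name `convex_upsample`, the weight layout `(9, r, r, H, W)`, and the edge-replicated border are hypothetical choices, not details taken from this pipeline.

```python
import numpy as np


def convex_upsample(coarse: np.ndarray, logits: np.ndarray, r: int) -> np.ndarray:
    """Upsample a coarse (H, W) map by factor r using learned convex weights.

    Hypothetical sketch. `logits` has shape (9, r, r, H, W): for each coarse
    pixel (i, j) and each sub-pixel offset (a, b), 9 raw scores over the 3x3
    coarse neighborhood.
    """
    H, W = coarse.shape
    # Softmax over the 9 neighbors so weights are non-negative and sum to 1.
    w = np.exp(logits - logits.max(axis=0, keepdims=True))
    w /= w.sum(axis=0, keepdims=True)
    # Gather the 3x3 neighborhood of every coarse pixel (edge-replicated border).
    padded = np.pad(coarse, 1, mode="edge")
    neigh = np.stack([padded[dy:dy + H, dx:dx + W]
                      for dy in range(3) for dx in range(3)])  # (9, H, W)
    # Weighted sum over neighbors: (9, r, r, H, W) * (9, 1, 1, H, W) -> (r, r, H, W).
    fine = (w * neigh[:, None, None]).sum(axis=0)
    # Interleave sub-pixel offsets: (r, r, H, W) -> (H, r, W, r) -> (H*r, W*r).
    return fine.transpose(2, 0, 3, 1).reshape(H * r, W * r)


coarse = np.arange(6, dtype=float).reshape(2, 3)
logits = np.random.randn(9, 2, 2, 2, 3)
print(convex_upsample(coarse, logits, r=2).shape)
```

Because the weights are predicted per pixel, a network can learn to copy values from one side of a geometric edge instead of blending across it, which fixed bilinear upsampling cannot do.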
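The aggregation step in the background module is not specified further here. A minimal sketch, assuming the neighboring frames have already been aligned to the current frame and each comes with a binary mask marking where background is visible, is a masked temporal average: the context image averages the visible background samples, and the context mask marks pixels that at least one neighbor observed. The helper `aggregate_context` and its inputs are hypothetical.

```python
import numpy as np


def aggregate_context(frames: np.ndarray, masks: np.ndarray, eps: float = 1e-8):
    """Masked average of neighboring frames (hypothetical sketch).

    frames: (N, H, W, 3) float images, ASSUMED already aligned to the current frame.
    masks:  (N, H, W), 1 where that frame shows background, 0 otherwise.
    Returns (context_image of shape (H, W, 3), context_mask of shape (H, W)).
    """
    m = masks[..., None].astype(frames.dtype)  # (N, H, W, 1)
    weight = m.sum(axis=0)  # number of frames that saw background at each pixel
    # Average only the visible samples; pixels no frame observed stay 0.
    context = (frames * m).sum(axis=0) / np.maximum(weight, eps)
    context_mask = (weight[..., 0] > 0).astype(frames.dtype)
    return context, context_mask


frames = np.stack([np.full((1, 3, 3), 1.0), np.full((1, 3, 3), 3.0)])
masks = np.array([[[1, 0, 0]], [[1, 1, 0]]], dtype=float)
image, mask = aggregate_context(frames, masks)
print(image[..., 0], mask)
```

Pixels where the context mask is 0 were never observed as background in the window, so a network such as the U-Net described above has to fill them in.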