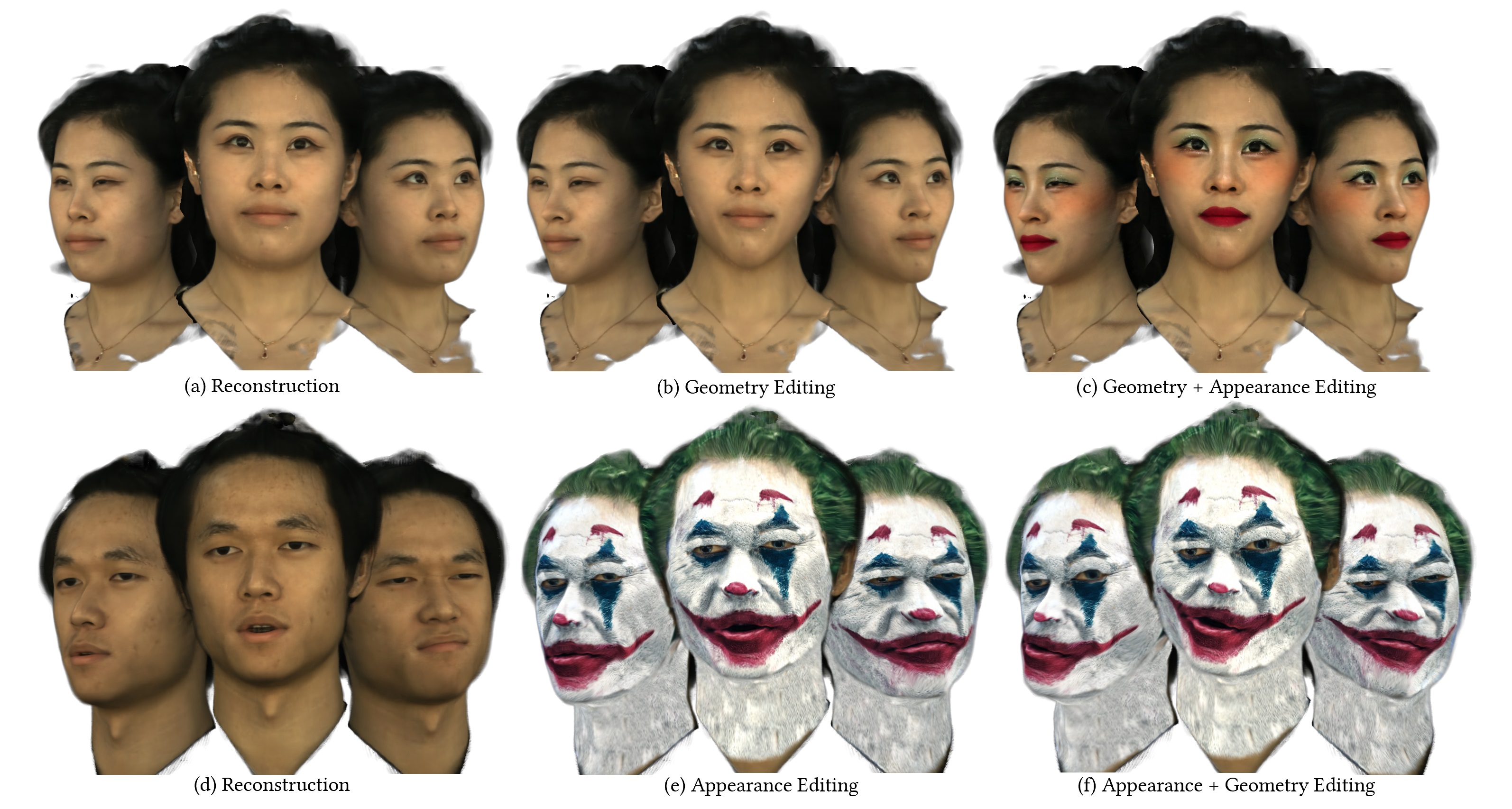

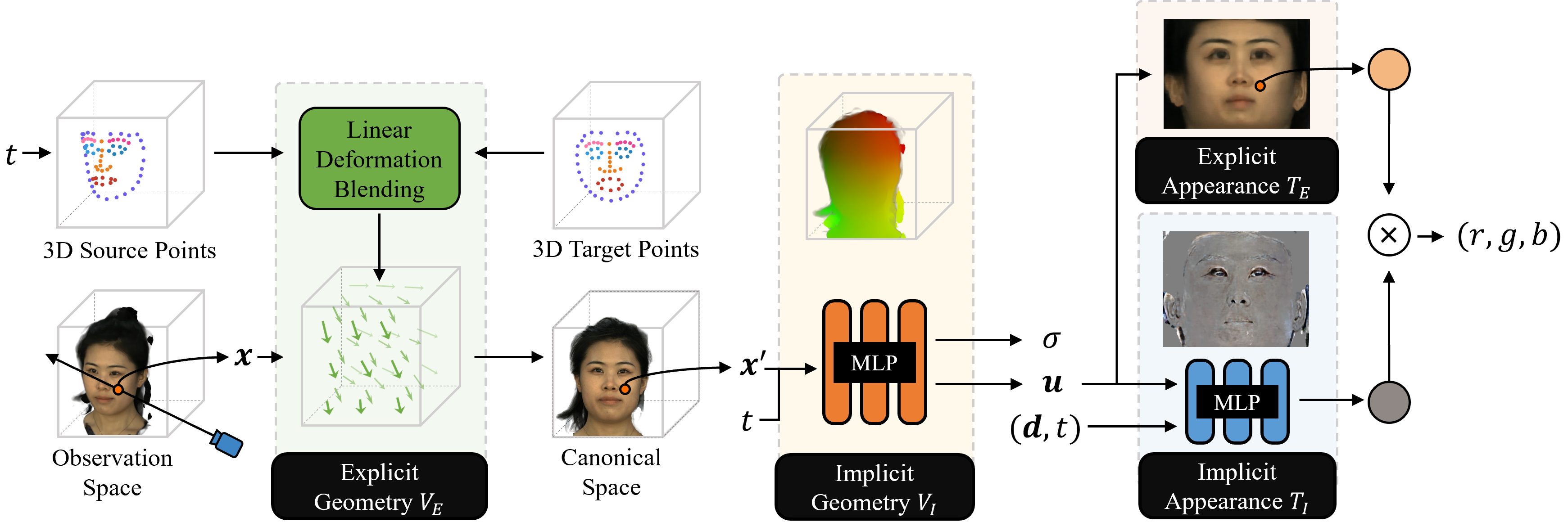

Implicit radiance functions have emerged as a powerful scene representation for reconstructing and rendering photo-realistic views of a 3D scene. These representations, however, suffer from poor editability. Explicit representations such as polygonal meshes, on the other hand, are easy to edit but are less suited to reconstructing accurate details in dynamic human heads, such as fine facial features, hair, teeth, and eyes. In this work, we present Neural Parameterization (NeP), a hybrid representation that combines the advantages of implicit and explicit methods. NeP is capable of photo-realistic rendering while allowing fine-grained editing of the scene geometry and appearance. We first disentangle geometry and appearance by parameterizing the 3D geometry into 2D texture space. We enable geometric editability by introducing an explicit linear deformation blending layer. The deformation is controlled by a set of sparse key points, which can be explicitly and intuitively displaced to edit the geometry. For appearance, we develop a hybrid 2D texture consisting of an explicit texture map for easy editing and implicit view- and time-dependent residuals that model temporal and view variations. We compare our method to several reconstruction and editing baselines. The results show that NeP achieves almost the same level of rendering accuracy as the baselines while maintaining high editability.
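To make the idea of a linear deformation blending layer concrete, here is a minimal NumPy sketch. It is not the NeP implementation: the function names, the Gaussian weighting, and the `sigma` parameter are assumptions chosen for illustration. The text above only states that sparse key points control the deformation through linear blending.

```python
import numpy as np

def blend_weights(points, keypoints, sigma=0.5):
    """Normalized Gaussian weights of each point w.r.t. each key point.

    The Gaussian falloff is an assumed stand-in for however the
    blending weights are actually obtained.
    """
    # Squared distances between every point and every key point: (N, K)
    d2 = np.sum((points[:, None, :] - keypoints[None, :, :]) ** 2, axis=-1)
    w = np.exp(-d2 / (2.0 * sigma ** 2))
    # Normalize so that the weights of each point sum to (almost) one
    return w / (w.sum(axis=1, keepdims=True) + 1e-8)

def deform(points, keypoints, displacements, sigma=0.5):
    """Move each point by a weighted linear blend of key-point displacements."""
    w = blend_weights(points, keypoints, sigma)  # (N, K)
    return points + w @ displacements            # (N, 3)

# Two hypothetical key points; only the first one is displaced (along +y).
keypoints = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
displacements = np.array([[0.0, 0.1, 0.0], [0.0, 0.0, 0.0]])

# Query points: at key point 0, at key point 1, and halfway between them.
points = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.5, 0.0, 0.0]])
deformed = deform(points, keypoints, displacements)
```

In this sketch, editing the geometry amounts to changing a row of `displacements`: points near the edited key point follow it closely, and the influence decays smoothly with distance.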

We disentangle a dynamic radiance field into a geometry representation and an appearance representation. The geometry is composed of an explicit deformation field controlled by sparse control points, and an implicit UV and density field that maps any 3D position to a 2D texture coordinate. The appearance is made up of an explicit texture map shared by all frames, and an MLP that models all view- and time-dependent effects on the map.
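The appearance branch described above can be sketched as a texture lookup plus a learned residual. The code below is an illustrative assumption, not the authors' code: the bilinear sampling, the additive combination, the tiny two-layer MLP, and all names (`sample_texture`, `residual_mlp`, `shade`) are hypothetical.

```python
import numpy as np

def sample_texture(texture, uv):
    """Bilinearly sample an (H, W, 3) texture at uv coordinates in [0, 1]^2."""
    h, w, _ = texture.shape
    x = np.clip(uv[:, 0], 0.0, 1.0) * (w - 1)
    y = np.clip(uv[:, 1], 0.0, 1.0) * (h - 1)
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    fx = (x - x0)[:, None]
    fy = (y - y0)[:, None]
    top = texture[y0, x0] * (1 - fx) + texture[y0, x1] * fx
    bottom = texture[y1, x0] * (1 - fx) + texture[y1, x1] * fx
    return top * (1 - fy) + bottom * fy

def residual_mlp(uv, view_dir, t, params):
    """Toy two-layer ReLU MLP mapping (uv, view direction, time) to an RGB residual."""
    w1, b1, w2, b2 = params
    feats = np.concatenate([uv, view_dir, t], axis=-1)  # (N, 2 + 3 + 1)
    hidden = np.maximum(feats @ w1 + b1, 0.0)
    return hidden @ w2 + b2

def shade(texture, uv, view_dir, t, params):
    """Explicit texture color plus an implicit view- and time-dependent residual."""
    return sample_texture(texture, uv) + residual_mlp(uv, view_dir, t, params)

# A 2x2 texture shared by all frames, and zero-initialized MLP parameters
# so that the residual vanishes and only the explicit texture contributes.
texture = np.array([[[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]],
                    [[0.0, 0.0, 1.0], [1.0, 1.0, 1.0]]])
params = (np.zeros((6, 8)), np.zeros(8), np.zeros((8, 3)), np.zeros(3))

uv = np.array([[0.0, 0.0], [1.0, 1.0], [0.5, 0.5]])
view_dir = np.tile(np.array([0.0, 0.0, 1.0]), (3, 1))
t = np.zeros((3, 1))
colors = shade(texture, uv, view_dir, t, params)
```

Under these assumptions, painting on `texture` changes the appearance consistently in every frame, while the residual term is left to account for effects that depend on the viewing direction and time.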


@article{ma2022neural,
  title={Neural Parameterization for Dynamic Human Head Editing},
  author={Ma, Li and Li, Xiaoyu and Liao, Jing and Wang, Xuan and Zhang, Qi and Wang, Jue and Sander, Pedro},
  journal={arXiv preprint arXiv:2207.00210},
  year={2022}
}